Seedance 2.0 Review: Is ByteDance’s New AI Model the Best Video Generator of 2026?

Date: February 9, 2026

Category: AI Tools / Video Production

Verdict: ⭐⭐⭐⭐⭐ (4.8/5)

The landscape of AI video generation has shifted dramatically in early 2026. While OpenAI’s Sora and Google’s Veo have captured headlines for realistic physics simulation, a new contender from ByteDance has quietly reshaped the expectations of creators.

Seedance 2.0 represents a significant leap forward in multimodal AI. It is not just an image animator. It is a comprehensive virtual director. Early adopters and filmmakers testing the beta version suggest this tool solves the biggest problem in generative video: consistency.

This review analyzes feedback from the creator community to determine if Seedance 2.0 is truly the industry disruptor many claim it to be.

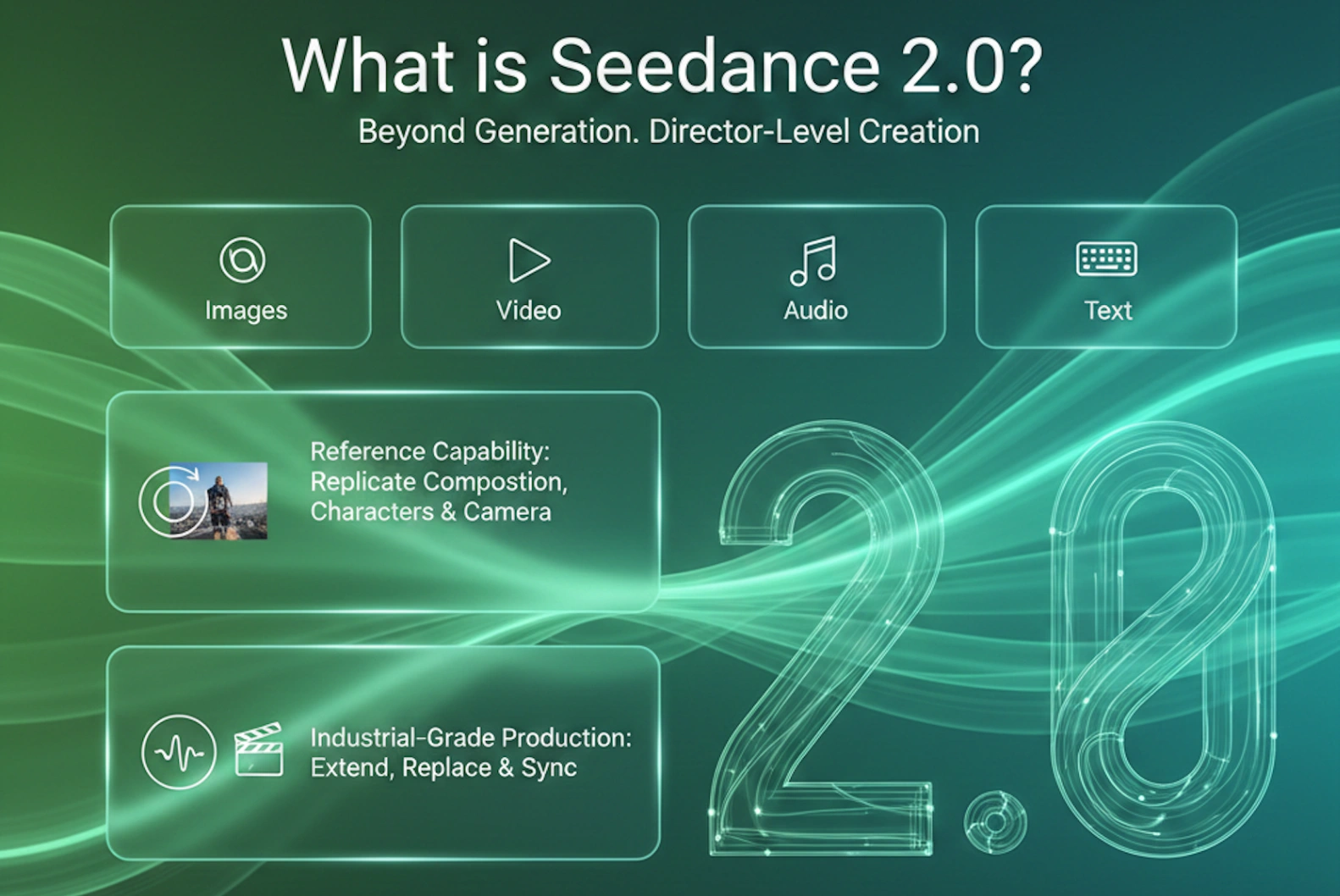

What is Seedance 2.0?

Seedance 2.0 is the latest video generation model developed by ByteDance, the parent company of TikTok. Unlike its predecessors, this model focuses on controllability. It allows users to input text, multiple reference images, video clips, and audio files simultaneously.

The goal is to move beyond random slot-machine generation. Seedance 2.0 aims to provide precise control over character appearance, camera movement, and pacing. It supports resolutions up to 2K and includes native audio generation features.

The "Director Mode" Revolution

The most common praise among early testers is the feeling of being a director rather than a prompter. Users highlight three distinct advantages that set Seedance 2.0 apart from competitors like Kling or Runway.

1. Multi-Shot Consistency

The holy grail of AI video has always been character consistency. Previous models often morphed faces or changed clothing between shots.

Reviewers note that Seedance 2.0 excels here. By allowing users to upload up to nine reference images, the model locks onto character identity. You can generate a close-up of a character speaking and then cut to a wide shot of the same character walking down a street without losing their likeness. Filmmakers report that this feature alone makes narrative storytelling viable for the first time.
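To make the reported input limits concrete, here is a minimal sketch of how a creator might check a multimodal request before submitting it. It is not the Seedance 2.0 API: the `GenerationRequest` class, its field names, and the `validate` method are hypothetical. The only figure taken from this review is the nine-image reference limit.

```python
from dataclasses import dataclass, field
from typing import List

# Limit reported in this review; the real service may enforce it differently.
MAX_REFERENCE_IMAGES = 9


@dataclass
class GenerationRequest:
    """Hypothetical bundle of inputs (assumption, not the real Seedance API)."""
    prompt: str
    reference_images: List[str] = field(default_factory=list)
    video_clips: List[str] = field(default_factory=list)
    audio_files: List[str] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if the request breaks the limits assumed here."""
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if len(self.reference_images) > MAX_REFERENCE_IMAGES:
            raise ValueError(
                f"at most {MAX_REFERENCE_IMAGES} reference images allowed, "
                f"got {len(self.reference_images)}"
            )


# Usage: a close-up and a wide shot would reuse the same reference set.
request = GenerationRequest(
    prompt="Close-up of the character speaking, then cut to a wide street shot",
    reference_images=[f"character_{i}.png" for i in range(1, 10)],
)
request.validate()  # passes: exactly nine reference images
```

Keeping the reference set in one object reflects the workflow described above, where the same images anchor character identity across several shots.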

2. Professional Pacing and Editing

Most AI videos feel like slow-motion dreams. Seedance 2.0 breaks this pattern.

Creators have shared demonstrations where the AI understands film grammar. It can execute sharp cuts, follow complex camera directions like "dolly zoom", and manage pacing that feels intentional. One filmmaker noted that the model handles shot transitions with a level of sophistication that typically requires a human editor.

3. Native Audio and Lip-Sync

Audio is no longer an afterthought. Seedance 2.0 generates video and audio in a single pass.

While not perfect, the lip-sync capabilities are reportedly impressive. Characters speak with accurate phoneme matching in multiple languages. The model also generates ambient sound, such as wind, traffic, or footsteps, that closely matches the visual action. This integration can save creators hours of post-production work.

The Workflow: From "Prompts" to "Production"

The user experience has received high marks for its flexibility. The "Keep Shooting" workflow allows creators to keep extending a clip rather than starting over.

If a generated 5-second clip is perfect, users can extend it for another 10 seconds while introducing new elements or changing the camera angle. This capability allows for the creation of longer, cohesive scenes. Some users claim to have produced short films in under 30 minutes, a process that would traditionally take days or weeks.

The consensus is clear: ByteDance is shipping features faster than many Western competitors. The ability to iterate quickly and reference existing assets makes the tool feel like a professional editing suite.

Expert Insight: "The Childhood of AIGC is Over"

The impact of this technology extends beyond casual users. Feng Ji, the producer behind the global hit Black Myth: Wukong, recently offered a stark assessment of the new model, declaring that "the childhood era of AIGC has ended".

In his view, Seedance 2.0 is currently the undisputed leader, described as the "strongest video generation model on Earth" with no close second. He highlights that the model’s ability to integrate multimodal information (text, images, video, and sound) represents a complete technological leap, one that is immediately apparent once you experience its low barrier to entry.

However, he also predicts that Seedance 2.0 will trigger a massive restructuring of the industry. As video production costs plummet to equal the marginal cost of computing power, we will likely see unprecedented content inflation and the collapse of traditional production workflows. While this shift will democratize video for e-commerce and potentially evolve into new forms of "gamified" entertainment, it brings severe risks.

With Seedance 2.0 making hyper-realistic video generation accessible to everyone, a crisis of trust is inevitable. His urgent advice is clear: warn your families that any unverified video content, particularly content featuring personal likenesses or voices, can now be easily forged and must be cross-verified to avoid deception.

The Critical Takes: It Is Not Perfect Yet

Despite the enthusiasm, objective reviews highlight several limitations and concerns.

Technical Limitations:

- Prompt Sensitivity: The model requires precise cinematic language. Vague prompts yield generic results.

- Physics Glitches: While character consistency is high, complex object interactions can still fail. Hands holding cups or characters interacting with water occasionally result in visual errors.

- Clip Length: The base generation is often capped at around 15 seconds. While extensions are possible, generating long-form content still requires stitching multiple clips together.
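As a rough illustration of the stitching overhead, the sketch below splits a target runtime into clips that each fit under a cap. The 15-second default comes from the approximate figure quoted above. The function name `plan_segments` is hypothetical, and the real limit may differ by plan or mode.

```python
from typing import List


def plan_segments(target_seconds: float, max_clip_seconds: float = 15.0) -> List[float]:
    """Split a target runtime into clip lengths that each fit under the cap.

    The 15-second default is the approximate cap cited in this review,
    not an official specification.
    """
    if target_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    full_clips = int(target_seconds // max_clip_seconds)
    remainder = target_seconds - full_clips * max_clip_seconds
    segments = [float(max_clip_seconds)] * full_clips
    if remainder > 1e-9:  # add a shorter final clip if time is left over
        segments.append(remainder)
    return segments


print(plan_segments(40))       # [15.0, 15.0, 10.0]
print(len(plan_segments(60)))  # 4 clips for a one-minute scene
```

Under this assumption, a one-minute scene needs four generations and three joins.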

Industry Concerns:

The sheer quality of the output has sparked anxiety among creative professionals. One viral review from a film director expressed genuine fear regarding the tool. The argument is that Seedance 2.0 could eliminate entry-level jobs in editing, VFX, and sound design. The ability to generate production-ready scenes from a simple script raises questions about the future value of traditional filmmaking skills.

Comparison: Seedance 2.0 vs. The Market

How does it stack up against the competition?

| Feature | Seedance 2.0 | Sora (OpenAI) | Kling 3.0 |
|---|---|---|---|
| Character Consistency | Excellent | Good | Medium |
| Audio Generation | Native & Synced | Limited | Basic |
| Camera Control | High (Director Mode) | Medium | Medium |
| Realism | Cinematic | Hyper-realistic | Stylized |

The general verdict from the community is that while Sora may have a slight edge in raw physics simulation, Seedance 2.0 wins on workflow and storytelling capability.

Final Verdict

Seedance 2.0 is more than just a viral trend. It represents a maturation of AI video technology.

For marketers, content creators, and independent filmmakers, this tool offers an unprecedented level of creative freedom. It effectively lowers the barrier to entry for high-quality video production. However, it also serves as a wake-up call for the industry regarding the rapid automation of creative tasks.

Pros:

- Unmatched control over character consistency.

- Integrated audio and video generation.

- Fast generation times and high resolution.

Cons:

- Steep learning curve for optimal prompting.

- Potential legal and ethical concerns regarding deepfakes.

- Currently limited availability in some regions.

If you are serious about AI video, Seedance 2.0 is the tool to beat in 2026.
