Seedance 2.0 Release: ByteDance’s Leap into Industrial-Grade AI Video Generation

Date: February 12, 2026

Author: Jsam (AI Expert)

Overview

The landscape of AIGC (Artificial Intelligence Generated Content) is evolving at a breakneck pace. If previous iterations of AI video tools were considered high-end "toys" for early adopters, the release of Seedance 2.0 by ByteDance on February 7, 2026, marks a definitive shift toward "industrial certainty".

Moving away from the "lottery ticket" style of generation (where users pray for a good result), Seedance 2.0 introduces a paradigm of control and consistency. It represents a significant milestone in the domestic AI landscape, often called China’s "Sora 2 Moment", signaling that AI video is ready to transition from technical demos to actual production workflows.

The release of Seedance AI 2.0 has triggered a massive reaction across the global tech and creative communities. Elon Musk commented on the rapid acceleration of the technology, noting simply that "things are moving fast", while Justine Moore, a partner at a16z, remarked that the "Turing test for AI video has been conquered". Domestically, Feng Ji, CEO of Game Science (creators of Black Myth: Wukong), declared that "The childhood of AIGC is over", praising the model as the strongest on the surface. Even acclaimed film director Jia Zhangke confirmed his intent to use the Seedance 2.0 AI video tool for future film production, validating its cinematic potential.

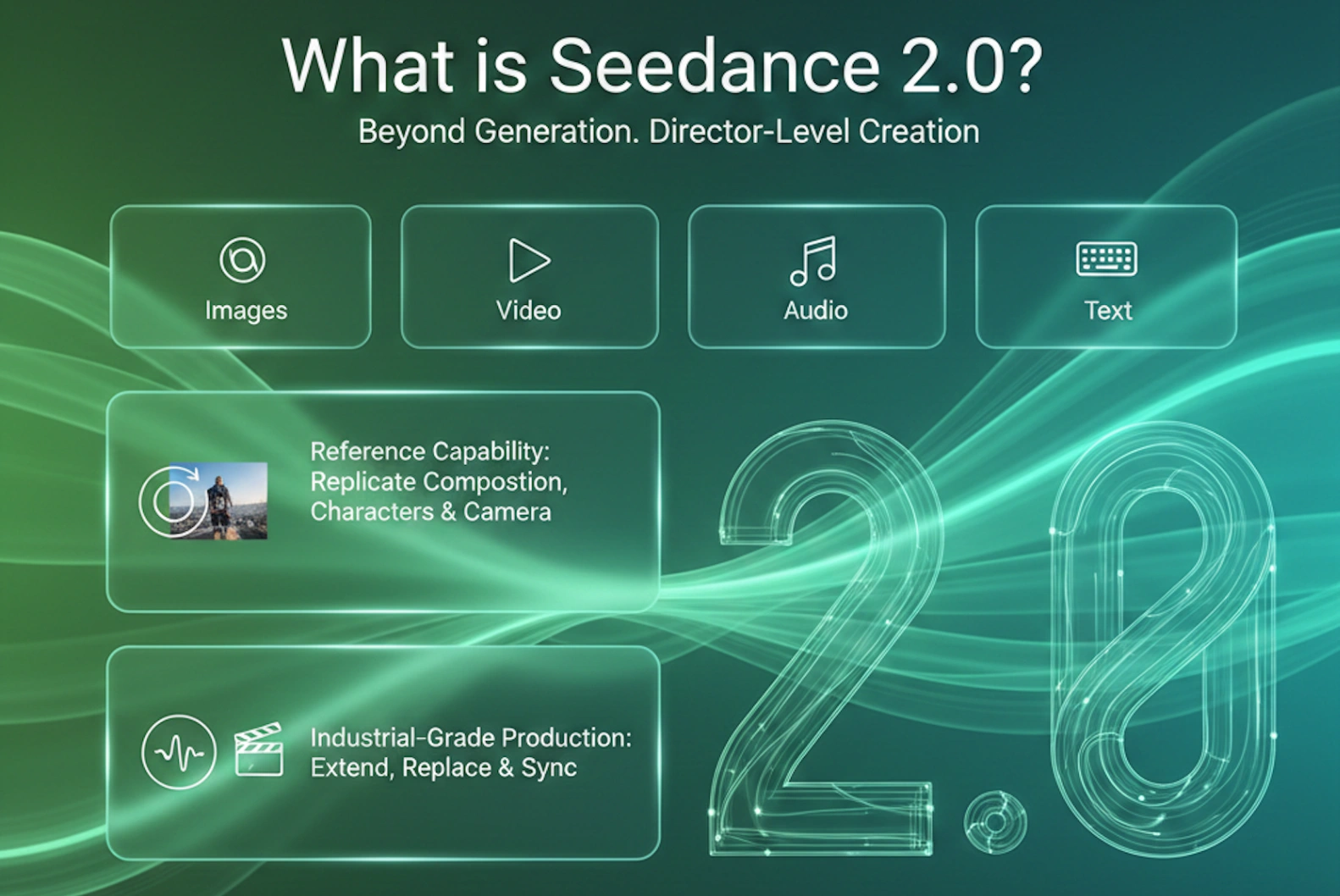

What is Seedance 2.0?

Seedance 2.0 is ByteDance’s latest flagship video generation model. Unlike its predecessors that relied primarily on text-to-video, Seedance 2.0 is built on a robust Unified Multi-Modal Audio-Video Joint Generation Architecture. It can simultaneously process and synthesize Images, Videos, Audio, and Text.

The core philosophy behind this model is "Directorial Control". It allows creators to input a massive combination of assets to guide the generation process. Specifically, the model supports up to 9 images, 3 video clips, 3 audio tracks, and natural language prompts simultaneously. This allows a user to provide a specific face (image), a specific camera movement (video), and a specific rhythm (audio), and the model will fuse them into a coherent new 4-to-15-second video. It is currently integrated into the Jimeng (Dreamina) ecosystem, Doubao, and the Volcano Ark experience center.

Core Capabilities & Features

Seedance 2.0 is not just about higher resolution; it is about workflow integration. Here are its standout features enriched by recent benchmark data:

1. The "Reference" Revolution

The most significant update is the "Reference Generation" capability, which breaks the boundaries of material usage.

- Camera & Motion Copying: Users can upload a reference video, and Seedance 2.0 will replicate the camera language (pan, tilt, zoom) and action rhythm without copying the original subject matter.

- Character Locking: By uploading reference photos, the model maintains character identity across different shots.

- Storyboarding to Video: In reported tests, the model successfully interpreted "Reference Image 1" as a storyboard script, "Reference Image 2" as the character, and "Reference Image 3" as the environment, synthesizing them into a 15-second "healing" short film that strictly followed the visual narrative.

2. Multi-Modal Synergy & Dual-Channel Audio

The Seedance AI 2.0 model supports a mix of inputs with a major upgrade in audio engineering:

- Dual-Channel Immersive Audio: Unlike mono-track predecessors, Seedance 2.0 generates dual-channel stereo sound. It supports multi-track parallel output including background music, ambient noise, and voiceovers.

- Foley-Grade Synchronization: In "ASMR" specific tests, the model accurately synchronized sound with micro-movements, such as the scratching of frosted glass, the rubbing of fabric, or the tapping of acrylic, creating a highly immersive audiovisual experience where the sound dictates the visual rhythm.

3. Advanced Editing & Logic

Seedance Video 2.0 offers "In-Video Editing" and "Video Extension", allowing users to:

- Targeted Modification: Replace specific elements (e.g., changing a character or an object) within an existing video while preserving the original motion and environment.

- Seamless Extensions: Use the "Keep Shooting" function to extend clips beyond the initial 15 seconds. For instance, a user can prompt a character to ride a horse to a tree, pick a flower, and then extend the video to have him present that flower to another character, maintaining perfect narrative and lighting continuity.

Key Improvements over Previous Generations

Based on internal beta testing and official showcases, Seedance 2.0 has achieved several qualitative leaps:

- Complex Motion & Physics (SOTA): The model excels in complex interaction scenes. In a Figure Skating benchmark, the model accurately rendered two athletes performing synchronized jumps, mid-air rotations, and landings. Crucially, it adhered to physical laws. When the male skater slightly lost balance, the recovery of gravity and the interaction with the ice surface were physically accurate, avoiding the "floating" or "glitching" often seen in AI video.

- Bridging the Consistency Gap: The "limitations on human portraiture" have been largely removed. The model shows a deep understanding of complex textures and distinct facial features, maintaining identity stability even during dynamic movement.

- Instruction Following: The precision of prompt adherence has increased significantly. If a user directs a specific sequence of events, the model executes it with higher fidelity than previous iterations.

Technical Breakthroughs

Under the hood, Seedance 2.0 leverages a sophisticated architecture that balances quality with efficiency.

- Unified Architecture: By adopting a unified multi-modal structure, Seedance 2.0 solves the problem of "long-term consistency". This allows for the "Living Photo" effect, where a static family portrait can be animated into a dynamic video where family members interact (passing red envelopes, cats ringing bells) before settling back into a posed photo, all while maintaining consistent lighting and facial identity.

- DiT Architecture: The model utilizes a Diffusion Transformer (DiT) path. This hybrid approach combines the logical understanding of Large Language Models (LLMs) with the detail-generation capabilities of Diffusion models.

- Inference Efficiency: Leveraging ByteDance’s Volcano Engine infrastructure, the generation time has been drastically optimized. High-definition generation can occur in as little as 2-5 seconds for short clips.

Industrial Impact

The release of Seedance 2.0 signals a restructuring of the creative industry:

- Democratization of "High-End" Production: What used to require a production crew of seven people working for a week can now potentially be achieved by a single creator in an afternoon.

- From "Toy" to "Tool": As noted by industry observers, the competition has shifted from parameter size to scenario application. Seedance 2.0 is designed to penetrate high-cost barriers in film, advertising, and e-commerce content production.

- Role Evolution: Repetitive labor (basic storyboarding, simple editing) will be automated, placing a premium on "AI Directors" who understand life, emotion, and storytelling rather than just camera optics.

Application Scenarios of Seedance 2.0

- AI Short Dramas: The improved consistency allows for narrative storytelling where characters look the same in Scene 1 and Scene 10.

- Dynamic Comics to Video: Static storyboards can be instantly converted into high-motion videos, as demonstrated by the "breaking the dimensional wall" test where a character travels through famous paintings (Van Gogh, Vermeer) with seamless stylistic transitions.

- Commercial Advertising: Rapid iteration of product showcases with precise control over brand colors and logos.

- Music Videos (MV): The audio-reactive capabilities allow for auto-generated visuals that sync perfectly with musical beats.

Challenges and Future Trends

Despite the excitement, professional objectivity requires us to acknowledge remaining hurdles highlighted in recent evaluations:

- Detail Stability: While vastly improved, occasional "hallucinations" in fine details still occur.

- Lip-Syncing: The current version still faces challenges with multi-person lip-syncing and occasional audio distortion in complex dialogue scenes.

- Complex Physics: While improved, highly complex interactions (e.g., intricate fluid mixing or chaotic collisions) can still result in minor physical law violations.

Looking Ahead: The industry is moving toward "World Models", which are systems that not only generate video but understand the underlying physics of the world, paving the way for AGI.

Availability

As of February 12, 2026, Seedance 2.0 has been launched on the Jimeng Video App, Doubao AI App, and Volcano Ark in China. Users can access it via the specific "Seedance 2.0" selection in the conversation or generation interface.

For developers and enterprise users looking to integrate these capabilities into their own workflows, ByteDance has indicated that the API is expected to be available soon.

KlingAIO.com will soon be releasing the official version of Seedance 2.0. You can try our Seedance 2.0 early access version now!